Introduction to Programming

At its core, programming is the act of instructing a machine on how to perform a specific task. It’s like teaching your dog to fetch, but in this case, the dog is your computer, and the ball might be, let’s say, displaying a photo on your screen.

Now, you might think that programming is just writing lines of code. Programming is actually a broader process that not only includes writing code but also problem-solving, system design, and logical thinking.

In the universe of programming, there are high-level and low-level languages. A low-level language, like assembly, is closer to what the machine understands, while a high-level language, such as Python or JavaScript, is more human-friendly. Picture having a conversation: high-level languages are like chatting with a friend over coffee in New York, while low-level languages are like trying to communicate with someone speaking a very specific, localized dialect.

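Additionally, some programming languages are compiled, while others are interpreted. If a language is compiled, its source code is translated into machine code ahead of time, before the program is executed. Interpreted languages, on the other hand, are translated and executed step by step while the program runs. In practice, this is a property of a language’s implementation rather than of the language itself, and many implementations mix both approaches.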
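As a small illustration of how the two approaches can blend, the sketch below uses Python’s built-in `compile` and `exec` functions. CPython, the reference Python implementation, first compiles source text to bytecode and then interprets that bytecode. The variable names (`source`, `namespace`) are chosen only for this example.

```python
# Illustrative sketch: CPython compiles source text to bytecode first,
# then its interpreter loop executes that bytecode.
source = "result = 6 * 7"

# Step 1: translate the source text into a code object (bytecode).
code_object = compile(source, "<example>", "exec")

# Step 2: execute the compiled code in a fresh namespace.
namespace = {}
exec(code_object, namespace)

print(namespace["result"])  # 42
```

Normally both steps happen automatically when you run a script; they are separated here only to make the translation step visible.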

A brief history of programming

Programming isn’t a new concept. In fact, it’s been with us long before computers existed in the form we know today. Devices like the abacus and the astrolabe are early examples of tools we used for intricate calculations.

However, it was with the advent of mechanical machines, like Charles Babbage’s Analytical Engine, that the foundation for modern programming was laid. We’re talking about the 19th century!

Over time, landmark languages like Fortran and COBOL emerged. These languages paved the way for the technological revolutions that would follow. With the evolution of languages also came new paradigms: procedural, object-oriented, and functional. The functional style dates back to Lisp in the late 1950s, but it has gained mainstream popularity only more recently.

Today, we’re in a modern era dominated by web, mobile, and cloud programming. Every swipe on your phone or online purchase has lines and lines of code working behind the scenes.

Programming today

Programming is the engine of our modern society. From apps for ordering food to advanced artificial intelligence systems aiding medical research, programming is everywhere.

Beyond simplifying our daily lives, programming has a profound societal impact. It has enabled progress in automation, data analysis, and entertainment. And what’s even more exhilarating is that we’re just scratching the surface. With advances on the horizon in artificial intelligence, quantum computing, and the Internet of Things (IoT), who knows what marvels await us in the programming world?

More articles

1 - The Computer

In today’s digital age, where electronic gadgets seamlessly integrate into our daily lives, understanding the bedrock upon which these marvels stand becomes not just an academic interest but a societal necessity. As we embark on this enlightening voyage into the heart of computers, we aim to demystify the intricate dance between the physical and the abstract, between the tangible hardware and the intangible software.

To the uninitiated, a computer might seem like a mere box—perhaps sometimes sleek and shiny—but a box nonetheless. Yet, within this “box” lies a universe of complexity and coordination.

Hardware represents the physical components of a computer: the Central Processing Unit (CPU), which is often likened to the brain of the system; the Random Access Memory (RAM), which acts as temporary storage while tasks are underway; storage devices that retain data; and peripherals like keyboards, mice, and monitors.

On the other side of this duality is software, a set of instructions that guides the hardware. There are various types of software, from system software like the operating system (OS), which coordinates the myriad hardware components, to application software that allows users to perform specific tasks, such as word processing or gaming.

The role of the operating system is pivotal. It acts as a bridge, translating user commands into instructions that the hardware can execute. If the hardware were an orchestra, the OS would be its conductor, ensuring each instrument plays its part harmoniously.

The binary system: decoding the language of machines

Human civilizations have developed numerous numbering systems over the millennia, but computers, with their logical circuits, have settled on the binary system. But why binary? Simply put, at the most foundational level, a computer’s operation is based on switches (transistors) that can be either ‘on’ or ‘off’, corresponding naturally to the binary digits, or ‘bits’, 1 and 0 respectively.

In this binary realm, a bit is the smallest data unit, representing a single binary value. A byte, comprising 8 bits, can represent 256 distinct values, ranging from \(00000000\) to \(11111111\). This binary encoding isn’t restricted to numbers; it extends to text, images, and virtually all forms of data. For instance, in ASCII encoding, the capital letter ‘A’ is represented as \(01000001\).
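The figures in this paragraph can be checked with a few lines of Python. This is a minimal sketch using only built-in functions (`ord`, `chr`, `format`, `int`); the variable names are illustrative.

```python
# A byte has 8 bits, so it can hold 2**8 distinct values.
BITS_PER_BYTE = 8
distinct_values = 2 ** BITS_PER_BYTE
print(distinct_values)  # 256

# ord() gives the numeric code of a character;
# format(..., "08b") renders it as 8 binary digits.
code_point = ord("A")
bits = format(code_point, "08b")
print(code_point, bits)  # 65 01000001

# Going the other way: parse the bits as base 2, then map back to a character.
print(chr(int(bits, 2)))  # A
```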

In a following post, we’ll describe the binary system in more detail and introduce another system widely used in computing: hexadecimal.

Memory and Storage: the sanctuaries of data

The concepts of memory and storage are pivotal in understanding computer architecture. Though sometimes used interchangeably in colloquial parlance, their roles in a computer system are distinct.

Memory, particularly RAM, is volatile, meaning information stored is lost once the computer is turned off. RAM serves as the computer’s “workspace”, temporarily storing data and instructions during operations. There are various RAM types, with DRAM and SRAM being the most prevalent.

Contrastingly, Read-Only Memory (ROM) is non-volatile, used predominantly to store firmware—software intrinsically linked to specific hardware, requiring infrequent alterations.

In terms of data storage, devices like hard drives, SSDs, and flash drives offer permanent data retention. These storage mechanisms are part of the memory hierarchy, which ranges from the swift but limited cache memory to the expansive but slower secondary storage.

References

- Patterson, D. & Hennessy, J. (2014). Computer Organization and Design. Elsevier.

- Silberschatz, A., Galvin, P. B., & Gagne, G. (2009). Operating System Concepts. John Wiley & Sons.

- Tanenbaum, A. (2012). Structured Computer Organization. Prentice Hall.

- Brookshear, J. G. (2011). Computer Science: An Overview. Addison-Wesley.

- Jacob, B., Ng, S. W., & Wang, D. T. (2007). Memory Systems: Cache, DRAM, Disk. Morgan Kaufmann.

- Siewiorek, D. P. & Swarz, R. S. (2017). Reliable Computer Systems: Design and Evaluation. A K Peters/CRC Press.

Cheers for making it this far! I hope this journey through the programming universe has been as fascinating for you as it was for me to write down.

We’re keen to hear your thoughts, so don’t be shy – drop your comments, suggestions, and those bright ideas you’re bound to have.

Also, to delve deeper than these lines, take a stroll through the practical examples we’ve cooked up for you. You’ll find all the code and projects in our GitHub repository learn-software-engineering/examples.

Thanks for being part of this learning community. Keep coding and exploring new territories in this captivating world of software!

2 - Numerical Systems

Every day, we’re surrounded by numbers. From the alarm clock’s digits waking us up in the morning to the price of our favourite morning coffee. But, have you ever stopped to ponder the essence of these numbers? In this article, we will dive deep into the captivating world of numbering systems, unravelling how one number can have myriad representations depending on the context.

The decimal system: the bedrock of our daily life

From a tender age, we’re taught to count using ten digits: 0 through 9. This system, known as the decimal system, underpins almost all our mathematical and financial activities, from basic arithmetic to calculating bank interest. Its roots trace back to our anatomy: the ten fingers on our hands, making it the most intuitive and natural system for us. Yet, its true charm emanates from its positional nature.

To grasp this concept, let’s dissect the number 237:

- The rightmost digit (7) stands for the units’ position. That is, \(7 \times 10^0\) (any number raised to the power of 0 is 1). Therefore, its value is simply 7.

- The middle digit (3) represents the tens’ position, translating to \(3 \times 10^1 = 3 \times 10 = 30\).

- The leftmost digit (2) is in the hundreds’ position, decoding to \(2 \times 10^2 = 2 \times 100 = 200\).

Combining these values gives back the original number:

$$2 \times 10^2 + 3 \times 10^1 + 7 \times 10^0 = 200 + 30 + 7 = 237$$
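The same breakdown can be sketched in Python. The helper `decimal_expansion` is a hypothetical name introduced for this example, and it assumes a non-negative integer as input.

```python
def decimal_expansion(number):
    """Return each digit's positional contribution, from left to right.

    Assumes `number` is a non-negative integer.
    """
    digits = str(number)
    terms = []
    # Walk the digits from right to left so the position matches the power of 10.
    for position, digit in enumerate(reversed(digits)):
        terms.append(int(digit) * 10 ** position)
    return list(reversed(terms))


terms = decimal_expansion(237)
print(terms)       # [200, 30, 7]
print(sum(terms))  # 237
```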

The binary system: computers’ coded language

While the decimal system reigns supreme in our everyday lives, the machines we use daily, from our smartphones to computers, operate in a starkly different realm: the binary world. In this system, only two digits exist: 0 and 1. It might seem restrictive at first glance, but this system is the essence of digital electronics. Digital devices, with their billions of transistors, operate using these two states: on (1) and off (0).

Despite its apparent simplicity, the binary system can express any number or information that the decimal system can. For instance, the decimal number 5 is represented as 101 in binary.

Binary, with its ones and zeros, operates in a manner akin to the decimal system, but instead of powers of 10, it uses powers of 2.

Consider the binary number 1011:

- The rightmost bit denotes \(1 \times 2^0 = 1\).

- The subsequent bit stands for \(1 \times 2^1 = 2\).

- Next up is \(0 \times 2^2 = 0\).

- The leftmost bit in this number signifies \(1 \times 2^3 = 8\).

Thus, 1011 in binary translates to the following in the decimal system:

$$1011 = 1 \times 2^3 + 0 \times 2^2 + 1 \times 2^1 + 1 \times 2^0 = 8 + 0 + 2 + 1 = 11$$
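As a rough sketch, the conversion above can be written as a loop over the bits. The function name `binary_to_decimal` is made up for this example; Python’s built-in `int(text, 2)` and `bin` do the same job and are shown for comparison.

```python
def binary_to_decimal(bits):
    """Sum bit * 2**position, counting positions from the rightmost bit."""
    total = 0
    for position, bit in enumerate(reversed(bits)):
        total += int(bit) * 2 ** position
    return total


print(binary_to_decimal("1011"))  # 11
print(int("1011", 2))             # 11 (built-in equivalent)
print(bin(5))                     # 0b101
```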

Hexadecimal system: bridging humans and machines

While the binary system is perfect for machines, it can get a tad cumbersome for us, especially when dealing with lengthy binary numbers. This is where the hexadecimal system comes in. It employs sixteen distinct digits: 0-9 and A-F, with A representing 10, B representing 11, and so forth, up to F, which stands for 15.

Hexadecimal proves invaluable as it offers a more compact way to represent binary numbers. Each hexadecimal digit corresponds precisely to four binary bits. For instance, think of the binary representation of the number 41279 and notice how the hexadecimal system achieves a more succinct representation:

$$41279_{10} = 1010\ 0001\ 0011\ 1111_{2} = \text{A13F}_{16}$$
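The four-bits-per-digit correspondence can be sketched as follows. `binary_to_hex` and `HEX_DIGITS` are names introduced only for this illustration, and the input is assumed to be a string of 0s and 1s.

```python
HEX_DIGITS = "0123456789ABCDEF"


def binary_to_hex(bits):
    """Convert a binary string to hexadecimal, translating 4 bits at a time."""
    # Pad on the left with zeros so the length is a multiple of 4.
    padded_length = -(-len(bits) // 4) * 4
    bits = bits.zfill(padded_length)
    # Split into groups of 4 bits; each group maps to exactly one hex digit.
    groups = [bits[i:i + 4] for i in range(0, len(bits), 4)]
    return "".join(HEX_DIGITS[int(group, 2)] for group in groups)


print(format(41279, "b"))                 # 1010000100111111
print(binary_to_hex("1010000100111111"))  # A13F
```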

But the hexadecimal system is more than just a compressed representation of binary numbers; it’s a positional numbering system like decimal or binary but based on 16 instead of 10 or 2. Let’s see how to derive the decimal representation of the example number (A13F):

- The rightmost digit represents \(F \times 16^0 = 15 \times 16^0 = 15\).

- The subsequent one stands for \(3 \times 16^1 = 48\).

- The next digit denotes \(1 \times 16^2 = 256\).

- The leftmost digit in this number signifies \(A \times 16^3 = 10 \times 16^3 = 40960\).

Therefore, A13F in hexadecimal translates to the following in the decimal system:

$$A13F = A \times 16^3 + 1 \times 16^2 + 3 \times 16^1 + F \times 16^0 = 10 \times 4096 + 1 \times 256 + 3 \times 16 + 15 \times 1 = 40960 + 256 + 48 + 15 = 41279$$
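The same positional sum can be sketched in Python. `hex_to_decimal` is a hypothetical helper written for this example; the built-ins `int(text, 16)` and `hex` are shown alongside it.

```python
def hex_to_decimal(hex_string):
    """Sum digit_value * 16**position, counting from the rightmost digit."""
    digits = "0123456789ABCDEF"
    total = 0
    for position, digit in enumerate(reversed(hex_string.upper())):
        # The index of a digit in the string is its numeric value (A -> 10, F -> 15).
        total += digits.index(digit) * 16 ** position
    return total


print(hex_to_decimal("A13F"))  # 41279
print(int("A13F", 16))         # 41279 (built-in equivalent)
print(hex(41279))              # 0xa13f
```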

Conclusion

Numbering systems are like lenses through which we perceive and understand the world of mathematics and computing. Although the decimal system may be the linchpin of our daily existence, it’s crucial to appreciate and comprehend the binary and hexadecimal systems, especially in this digital age.

So, the next time you’re in front of your computer or using an app on your smartphone, remember that behind that user-friendly interface, a binary world is in full swing, with the hexadecimal system acting as a translator between that realm and us.

References

- Ifrah, G. (2000). The Universal History of Numbers. London: Harvill Press.

- Tanenbaum, A. (2012). Structured Computer Organization. New Jersey: Prentice Hall.

- Knuth, D. (2007). The Art of Computer Programming: Seminumerical Algorithms. California: Addison-Wesley.


3 - Boolean Logic

In life, we often seek certainties. Will it rain tomorrow, true or false? Is a certain action right or wrong? This dichotomy, this division between two opposing states, lies at the very core of a fundamental branch of mathematics and computer science: Boolean logic.

Named in honour of George Boole, a 19th-century English mathematician, Boolean logic is a mathematical system that deals with operations resulting in one of two possible outcomes: true or false, typically represented as 1 and 0, respectively. In his groundbreaking work, “An Investigation of the Laws of Thought,” Boole laid the foundations for this logic, introducing an algebraic system that could be employed to depict logical structures.

Boolean operations

Within Boolean logic, several fundamental operations allow for the manipulation and combination of these binary expressions:

AND: This operation yields true (1) only if both inputs are true. For instance, if you have two switches, both need to be in the on position for a light to illuminate.

OR: It returns true if at least one of the inputs is true. Using the switch analogy, as long as one of them is in the on position, the light will shine.

NOT: This unary operation (accepting only one input) simply inverts the input value. Provide it with a 1, and you’ll get a 0, and vice versa.

NAND (NOT AND): It’s the negation of AND. It only returns false if both inputs are true.

NOR (NOT OR): The negation of OR. It yields true only if both inputs are false.

XOR (Exclusive OR): It returns true if the inputs differ. If both are the same, it returns false.

XNOR (Exclusive NOR): The inverse of XOR. It yields true if both inputs are the same.
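A minimal sketch of these operations in Python, using the built-in `and`, `or`, and `not` keywords on `True`/`False` values. The function names (`and_`, `nand`, and so on) are chosen for this example only.

```python
def and_(a, b):
    return a and b


def or_(a, b):
    return a or b


def not_(a):
    return not a


def nand(a, b):
    return not (a and b)


def nor(a, b):
    return not (a or b)


def xor(a, b):
    # True only when the two inputs differ.
    return a != b


def xnor(a, b):
    # True only when the two inputs are the same.
    return a == b


print(and_(True, False))   # False
print(or_(True, False))    # True
print(nand(True, True))    # False
print(nor(False, False))   # True
print(xor(True, False))    # True
print(xnor(False, False))  # True
```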

Why is this logic important in computing and programming?

Modern computing, at its core, is all about bit manipulation (those 1s and 0s we’ve mentioned). Every operation a computer undertakes, from basic arithmetic to rendering intricate graphics, involves Boolean operations at some level.

In programming, Boolean logic is used in control structures, such as conditional statements (if, else) and loops, allowing programs to make decisions based on specific conditions.
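For example, a conditional and a loop in Python both depend on Boolean expressions. The variables and messages below are invented purely for illustration.

```python
temperature = 31
is_raining = False

# The condition combines a comparison with NOT and AND.
if temperature > 30 and not is_raining:
    advice = "Go for a swim"
elif is_raining:
    advice = "Take an umbrella"
else:
    advice = "Enjoy your day"
print(advice)  # Go for a swim

# A while loop keeps running as long as its condition is true.
countdown = 3
ticks = []
while countdown > 0:
    ticks.append(countdown)
    countdown -= 1
print(ticks)  # [3, 2, 1]
```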

Truth Tables: mapping Boolean logic

A truth table graphically represents a Boolean operation. It lists every possible input combination and displays the operation’s result for each combination.

For instance:

| A | B | A AND B | A OR B | A XOR B | A NOR B | A NAND B | NOT A | A XNOR B |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 |
| 1 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 |
| 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 0 |
| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |
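A table like this can also be generated programmatically. The sketch below uses `itertools.product` to enumerate every input combination and Python’s bitwise operators on 0 and 1. The `OPERATIONS` dictionary and `truth_table` function are illustrative names, and the unary NOT column is left out to keep every operation two-input.

```python
from itertools import product

# Each operation takes two bits (0 or 1) and returns a bit.
OPERATIONS = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NOR": lambda a, b: 1 - (a | b),
    "NAND": lambda a, b: 1 - (a & b),
    "XNOR": lambda a, b: 1 - (a ^ b),
}


def truth_table():
    """Return one row per input pair: (A, B, AND, OR, XOR, NOR, NAND, XNOR)."""
    rows = []
    for a, b in product((1, 0), repeat=2):
        rows.append((a, b) + tuple(op(a, b) for op in OPERATIONS.values()))
    return rows


print("A B " + " ".join(OPERATIONS))
for row in truth_table():
    print(" ".join(str(value) for value in row))
```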

Concluding thoughts

Boolean logic is more than a set of abstract mathematical rules. It’s the foundational language of machines, the code underpinning the digital age in which we live. By understanding its principles, not only do we become more proficient in working with technology, but we also gain a deeper appreciation of the structures supporting our digital world.

References

- Boole, G. (1854). An Investigation of the Laws of Thought. London: Walton and Maberly.

- Tanenbaum, A. (2012). Structured Computer Organization. New Jersey: Prentice Hall.

- Minsky, M. (1967). Computation: Finite and Infinite Machines. New Jersey: Prentice-Hall.


4 - Set Up your Development Environment

Venturing into the world of programming might seem like a Herculean task, especially when faced with the initial decision: Where to begin? This article will guide you through the essential steps to set up your programming environment, ensuring a solid foundation for your coding journey.

Choosing a programming language

Choosing a programming language is the first and perhaps the most crucial step in the learning process. Several factors to consider when selecting a language include:

- Purpose: What do you want to code for? If it’s web development, JavaScript or PHP might be good options. If you’re into data science, R or Python might be more appropriate.

- Community: A language with an active community can be vital for beginners. A vibrant community usually means more resources, tutorials, and solutions available online.

- Learning curve: Some languages are easier to pick up than others. It’s essential to pick one that matches your experience level and patience.

- Job opportunities: If you’re eyeing a career in programming, researching the job market demand for various languages can be insightful.

While there are many valuable and potent languages, for the purpose of this course, we’ve chosen Python. This language is renowned for its simplicity and readability, making it ideal for those just starting out. Moreover, Python boasts an active community and a wide range of applications, from web development to artificial intelligence.

Installing Python

For Windows users:

- Download the installer:

- Run the installer:

- Once the download is complete, locate and run the installer .exe file.

- Make sure to check the box that says “Add Python to PATH” during installation. This step is crucial for making Python accessible from the Command Prompt.

- Follow the installation prompts.

- Verify installation:

- Open the Command Prompt and type:

- This should display the version of Python you just installed.

For Mac users:

- Download the installer:

- Run the installer:

- Once the download is complete, locate and run the .pkg file.

- Follow the installation prompts.

- Verify installation:

- Open the Terminal and type:

- This should display the version of Python you just installed.

For Linux (Ubuntu/Debian) users:

- Update packages:

- Install Python:

- Verify installation:

- After installation, you can check the version of Python installed by typing:

Integrated Development Environments (IDEs)

An IDE is a tool that streamlines application development by combining commonly-used functionalities into a single software package: code editor, compiler, debugger, and more. Choosing the right IDE can make the programming process more fluid and efficient.

When evaluating IDEs, consider:

- Language compatibility: Not all IDEs are compatible with every programming language.

- Features: Some IDEs offer features like auto-completion, syntax highlighting, and debugging tools.

- Extensions and plugins: Being able to customize and extend your IDE through plugins can be extremely beneficial.

- Price: There are free and paid IDEs. Evaluate whether the additional features of a paid IDE justify its cost.

For this course, we’ve selected Visual Studio Code (VS Code). It’s a popular, free code editor built on an open-source codebase, and with extensions it covers most of what a full IDE offers. It’s known for its straightforward interface, a vast array of plugins, and its capability to handle multiple programming languages. Its active community ensures regular updates and a plethora of learning resources.

Installing Visual Studio Code

For Windows users:

- Download the installer:

- Run the installer:

- Once the download is complete, locate and run the installer .exe file.

- Follow the installation prompts, including accepting the license agreement and choosing the installation location.

- Launch VS Code:

- After installation, you can find VS Code in your Start menu.

- Launch it, and you’re ready to start coding!

For Mac users:

- Download the installer:

- Install VS Code:

- Once the download is complete, open the downloaded .zip file.

- Drag the Visual Studio Code .app to the Applications folder, making it available in the Launchpad.

- Launch VS Code:

- Use Spotlight search or navigate to your Applications folder to launch VS Code.

For Linux (Ubuntu/Debian) users:

- Update packages and install dependencies:

sudo apt update

sudo apt install software-properties-common apt-transport-https wget

- Download and install the key:

wget -q https://packages.microsoft.com/keys/microsoft.asc -O- | sudo apt-key add -

- Add the VS Code repository:

sudo add-apt-repository "deb [arch=amd64] https://packages.microsoft.com/repos/vscode stable main"

- Install Visual Studio Code:

sudo apt update

sudo apt install code

- Launch VS Code:

- You can start VS Code from the terminal by typing `code`, or find it in your list of installed applications.

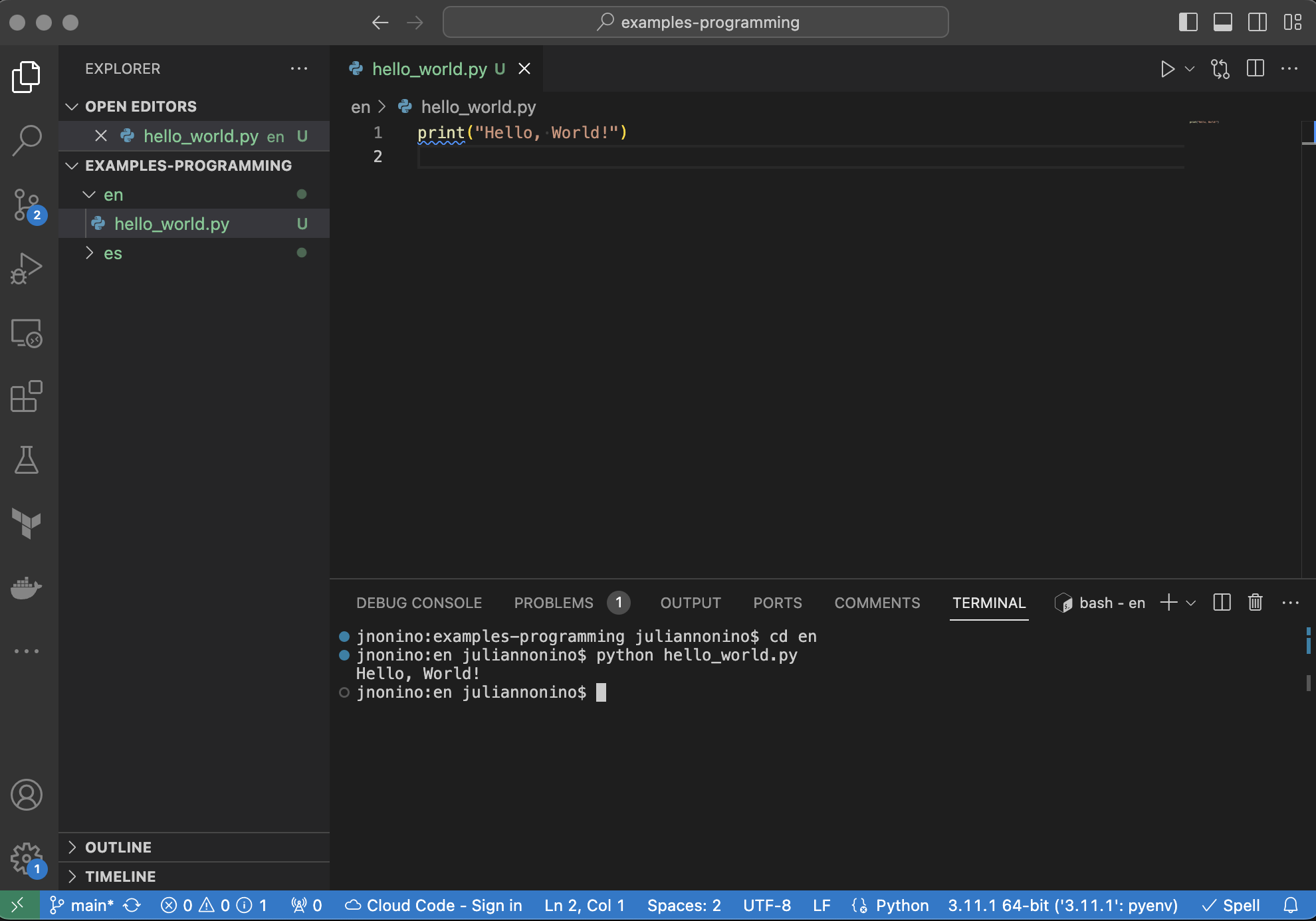

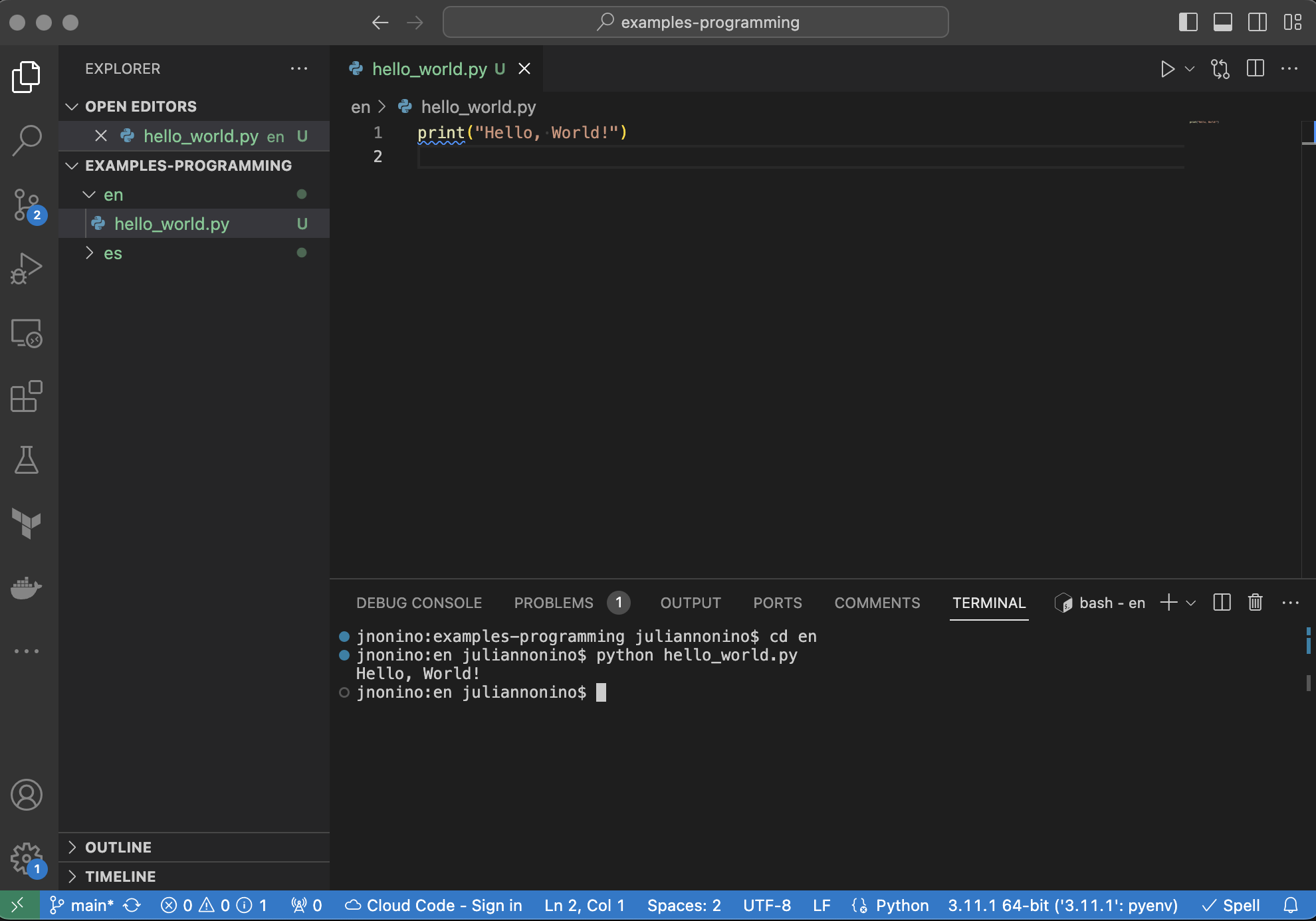

Write and execute your first program

Once you’ve set up your programming environment, it’s time to dive into coding.

Hello, World!

This is arguably the most iconic program for beginners. It’s simple, but it introduces you to the process of writing and executing code.

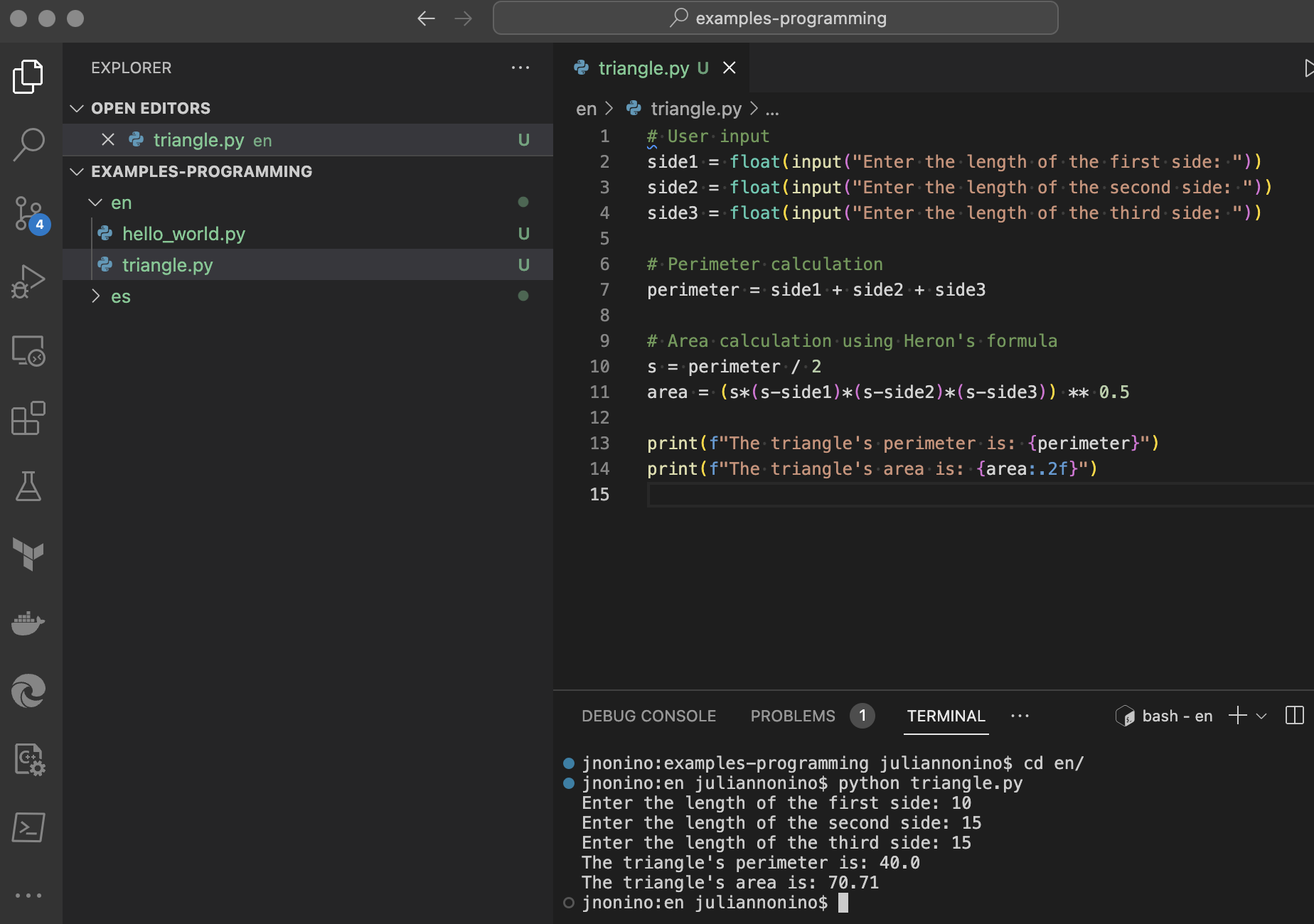

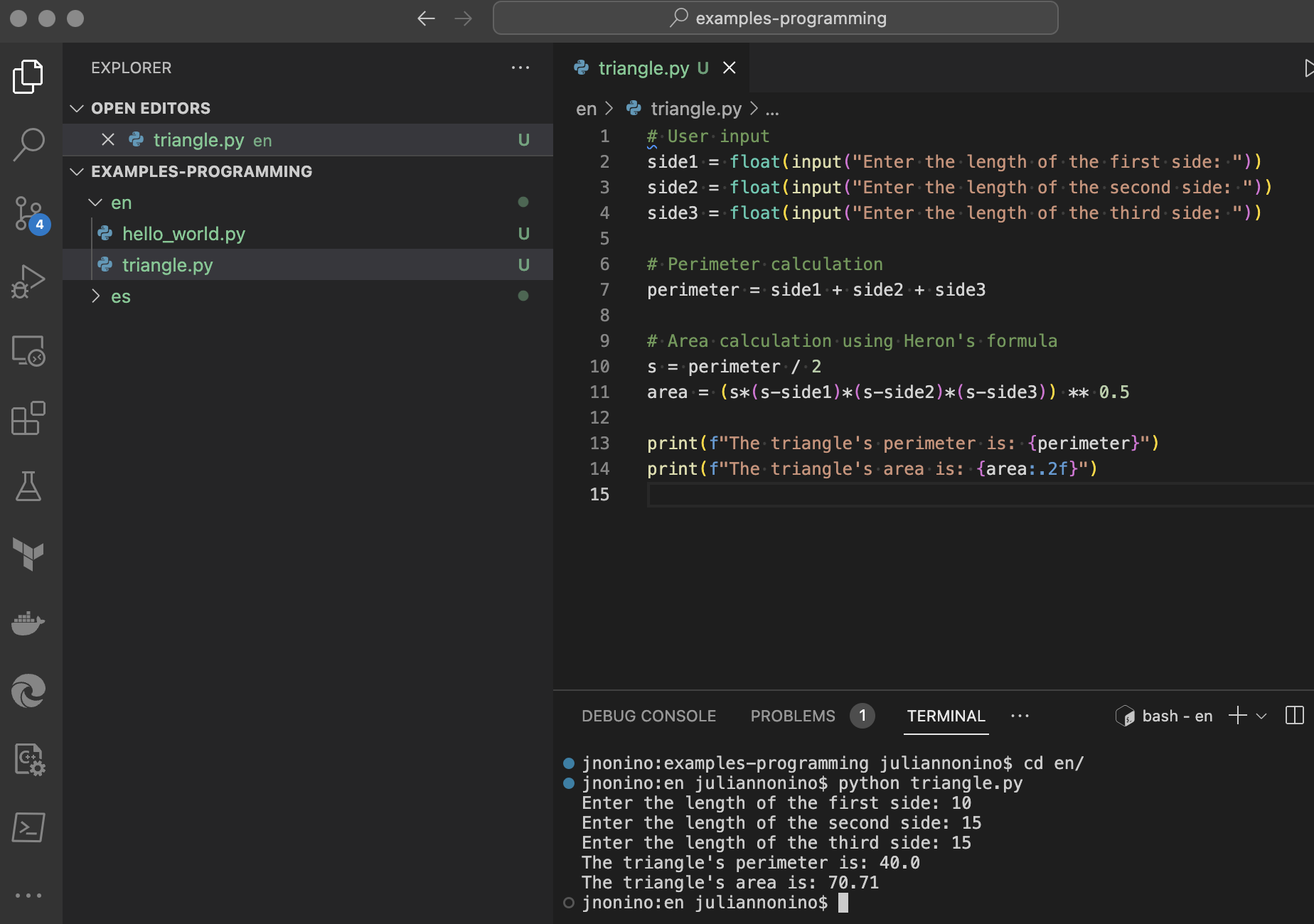
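The screenshots are not reproduced here as text, so the snippet below is a conventional Python version of the program, offered as an assumption about what they show. The file name `hello.py` mentioned afterwards is hypothetical.

```python
# Store the greeting in a variable, then print it to the screen.
message = "Hello, World!"
print(message)  # Hello, World!
```

If you save this in a file such as `hello.py`, you can run it from a terminal with `python hello.py` (on some systems the command is `python3`).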

Triangle area and perimeter calculation

This program is a tad more intricate. It doesn’t just print out a message; it also performs mathematical calculations.

    # User input
    side1 = float(input("Enter the length of the first side: "))
    side2 = float(input("Enter the length of the second side: "))
    side3 = float(input("Enter the length of the third side: "))

    # Perimeter calculation
    perimeter = side1 + side2 + side3

    # Area calculation using Heron's formula
    s = perimeter / 2
    area = (s*(s-side1)*(s-side2)*(s-side3)) ** 0.5

    print(f"The triangle's perimeter is: {perimeter}")
    print(f"The triangle's area is: {area:.2f}")
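One thing the program above does not do is check that the three lengths can actually form a triangle. If they cannot, the expression under the square root is negative and the reported area is meaningless. The variant below is a sketch of one way to guard against that. The function name `triangle_metrics` and the error message are assumptions made for this example.

```python
import math


def triangle_metrics(side1, side2, side3):
    """Return (perimeter, area), or raise ValueError for impossible triangles."""
    shortest, middle, longest = sorted((side1, side2, side3))
    # Triangle inequality: the two shorter sides together must exceed the longest.
    if shortest <= 0 or shortest + middle <= longest:
        raise ValueError("These lengths do not form a valid triangle")

    perimeter = side1 + side2 + side3
    # Heron's formula, using the semi-perimeter s.
    s = perimeter / 2
    area = math.sqrt(s * (s - side1) * (s - side2) * (s - side3))
    return perimeter, area


perimeter, area = triangle_metrics(3, 4, 5)
print(f"The triangle's perimeter is: {perimeter}")  # 12
print(f"The triangle's area is: {area:.2f}")        # 6.00
```

Wrapping the calculation in a function also makes it easy to reuse with values read from `input()`, as in the original program.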

Conclusion

Setting up a programming environment might appear daunting at first, but with the right tools and resources, it becomes a manageable and rewarding task. We hope this article provided you with a solid foundation to kickstart your programming journey. Happy coding!

References

- Lutz, M. (2013). Learning Python. O’Reilly Media.

- Microsoft. (2020). Visual Studio Code Documentation. Microsoft Docs.
